Intelligent Fire Calorimetry Driven by Computer Vision

By: Zilong Wang, PhD

(Supervisor: Dr. Xinyan Huang, The Hong Kong Polytechnic University)

This project was funded by the SFPE Foundation Student Research Grant

Introduction

Fire accidents, whether in the wild or in our homes and buildings, pose a serious threat to our safety and well-being. In the United States alone, fire departments responded to around 1.5 million fires in 2022. These fires led to the tragic loss of 3,790 lives and left 13,250 people injured. The property damage caused by these fires amounted to a staggering $18 billion [1].

In real fire scenarios, the fire's heat release rate (HRR), also called its power and measured in megawatts (MW), is a key indicator of the fire's scale and danger. It is widely used in fire safety design and emergency rescue strategies. For example, extinguishing a sofa fire with a power of 1 MW requires a water flow of at least 24 liters per minute. By this measure, a commonly used 300 liters per minute water hose is enough to extinguish a fully ignited room [2].
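The arithmetic behind this rule of thumb can be sketched in a few lines of Python. The sketch assumes that water demand scales linearly with HRR at the quoted 24 liters per minute per MW. The linear scaling and the function names are illustrative assumptions, not taken from [2].

```python
# Figure quoted in the text: about 24 L/min of water per MW of fire power.
# Linear scaling with HRR is an assumption made for this illustration.
WATER_L_PER_MIN_PER_MW = 24.0


def required_flow_l_per_min(hrr_mw: float) -> float:
    """Minimum water flow (L/min) for a fire of the given HRR (MW)."""
    return hrr_mw * WATER_L_PER_MIN_PER_MW


def max_hrr_mw(flow_l_per_min: float) -> float:
    """Largest fire (MW) that a given flow could handle under the same rule."""
    return flow_l_per_min / WATER_L_PER_MIN_PER_MW


print(required_flow_l_per_min(1.0))  # flow needed for a 1 MW sofa fire
print(max_hrr_mw(300.0))             # fire size matched by a 300 L/min hose
```

Under this linear assumption, a 300 liters per minute hose corresponds to a fire of about 12.5 MW.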

However, if an entire floor is burning or a tanker truck is on fire, how big is the fire? Currently, conventional technologies cannot measure the size of a fire in real time. Firefighters can only estimate the size and development of the fire based on their experience, for instance by visually assessing the size and color of the smoke.

The lack of accurate fire information can lead to inadequate fire rescue preparations, delaying the timing of extinguishment and rescue. It may also result in misjudging the danger of the fire scenario, causing unnecessary casualties. To protect firefighters' safety and improve firefighting decisions, real-time identification of the fire scenario is needed to better understand its development.

In fire experiments, we often capture videos of flames and smoke, which contain a wealth of information about fire behavior and characteristics, such as flame size, height, color, brightness, oscillation frequency, and their spatiotemporal evolution patterns. In turn, in-depth analysis of flame images can provide various information about fire development.

Today, computer vision methods have been widely used to identify hidden fire information and predict the development of fire scenarios, making real-time processing and identification of fire images possible. This article will discuss real-time fire calorimetry based on flame images using computer vision.

Fire Calorimetry Using Flame Images

With the advancement of computer technology, AI-based object detection has been widely applied in our daily lives. Through this technology, computers can think and learn like humans, telling us what objects they see, where they are, and what they are doing. Take Apple's Face ID technology as an example: once you've enrolled your facial information into your phone, you can unlock it, make payments, and perform other tasks using your face. Even if you're wearing glasses, have a beard, or are an identical twin, your phone can continuously learn your facial features, remember you, and recognize you.

So, if we train AI models to continuously learn the characteristics of flames in different fires, could we use AI to recognize fire much as it recognizes faces, and then predict the intensity of a fire from its flame images?

To achieve real-time fire calorimetry in real fire scenarios, we proposed a method based on deep learning and flame images [3]. We selected over 100 different fire test scenarios from the National Institute of Standards and Technology (NIST) Fire Calorimetry Database (FCD) [4], including fires involving paper boxes, trash bins, various types of furniture, and standard wood pallets (Fig. 1). During the experiments, cameras recorded the burning process while oxygen consumption calorimeters measured the real-time heat release rate of the fire. By matching flame images with fire calorimetry data, we trained a fire HRR identification model and successfully achieved image-based fire calorimetry.
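One simple way to match flame images with calorimetry data is to interpolate the measured HRR curve at each frame's timestamp. The sketch below assumes that the video and the calorimeter share the same time origin. The function name and the numbers are hypothetical, and the actual data preparation in [3] may differ.

```python
import numpy as np


def label_frames_with_hrr(frame_times_s, hrr_times_s, hrr_kw):
    """Assign an HRR label (kW) to each video frame by linear interpolation.

    frame_times_s: timestamps of the video frames (s)
    hrr_times_s:   timestamps of the calorimeter samples (s), increasing
    hrr_kw:        measured HRR at those timestamps (kW)
    """
    frame_times_s = np.asarray(frame_times_s, dtype=float)
    return np.interp(frame_times_s, hrr_times_s, hrr_kw)


# Illustrative numbers only (not taken from the NIST database).
hrr_times = [0.0, 10.0, 20.0]          # calorimeter samples every 10 s
hrr_values = [0.0, 100.0, 300.0]       # measured HRR in kW
frame_times = [0.0, 5.0, 10.0, 15.0]   # one video frame every 5 s

labels = label_frames_with_hrr(frame_times, hrr_times, hrr_values)
print(labels)  # one HRR label per frame
```

Each (frame, label) pair can then serve as one training sample for an image-to-HRR regression model.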

Figure 1: Typical burning items in the NIST Fire Calorimetry Database: (a) Trash Can, (b) Paper Box, (c) Laptop Cart, and (d) Wood Pallets

After training, we put the model to the test by simulating random vehicle fires and Christmas tree fires (Fig. 2). The results showed that the model is capable of accurately measuring the HRR of fires in real time. This work provides a simple and convenient way to measure the fire HRR and shows great potential in future smart firefighting applications.
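One common way to quantify this kind of agreement is the mean relative error between the predicted and measured HRR curves. The sketch below is a generic metric, not necessarily the one used in [3]. The function name, the `floor_kw` threshold, and the sample values are hypothetical.

```python
import numpy as np


def mean_relative_error(predicted_kw, measured_kw, floor_kw=1.0):
    """Mean of |predicted - measured| / measured.

    Samples whose measured HRR is below floor_kw are skipped, because the
    relative error is not meaningful when the fire is nearly out.
    """
    predicted = np.asarray(predicted_kw, dtype=float)
    measured = np.asarray(measured_kw, dtype=float)
    mask = measured >= floor_kw
    errors = np.abs(predicted[mask] - measured[mask]) / measured[mask]
    return float(np.mean(errors))


# Made-up values for illustration only.
measured = [0.0, 100.0, 200.0, 400.0]    # calorimeter HRR in kW
predicted = [5.0, 110.0, 180.0, 400.0]   # model output in kW

error = mean_relative_error(predicted, measured)
print(f"mean relative error: {error:.1%}")
```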

Figure 2: Evolution of fire HRR in real fire events by model quantification: (a) Vehicle Fire, and (b) Christmas Tree Fire

Automatic Fire Calorimetry Under Varying Fire Distance

Although we have developed calorimetric methods based on flame images, the proposed methods are limited to identifying fire development in fixed locations and cannot achieve continuous monitoring and accurate positioning of the fire source. When the camera position changes, we cannot accurately identify the fire HRR since the scale of the flame on the image will change with distance. In scenarios where the camera is moving, such as handheld cameras or drone cameras, can we apply computer vision to monitor, locate, and measure the fire source?

Figure 3: (a) Experimental setup for distance measurement, (b) binocular camera used for image capture, (c) examples of chessboard image for camera calibration, and (d) different burners.

To address the distance issues in moving fire scenarios, we conducted research on fire source identification and localization in fire scenarios to rescale the flame image [5]. We used portable stereo cameras to capture real-time fire video streams, and then fed these videos frame by frame into pre-trained computer vision models to achieve real-time monitoring, localization, and measurement of the fire (Fig. 3).
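The frame-by-frame processing described above can be sketched as a small loop that runs a detector on every frame and calls the HRR model only when a fire is found. All names here are hypothetical, and the two stand-in functions replace the trained networks so that the sketch runs on its own. This is not the authors' implementation.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple

Frame = object                    # placeholder for an image, e.g. a NumPy array
Box = Tuple[int, int, int, int]   # (x, y, width, height) in pixels


def monitor_stream(
    frames: Iterable[Frame],
    detect_fire: Callable[[Frame], Optional[Box]],
    estimate_hrr: Callable[[Frame, Box], float],
) -> Iterator[Tuple[int, Optional[Box], Optional[float]]]:
    """Yield (frame index, fire box, HRR estimate) for each frame."""
    for index, frame in enumerate(frames):
        box = detect_fire(frame)
        if box is None:
            # No fire in this frame: report it and skip the HRR model.
            yield index, None, None
            continue
        yield index, box, estimate_hrr(frame, box)


# Stand-in models: each "frame" is just a number acting as the flame height.
def fake_detector(frame):
    return (0, 0, 10, frame) if frame > 0 else None


def fake_hrr_model(frame, box):
    return 50.0 * box[3]  # pretend HRR grows with the box height


results = list(monitor_stream([0, 2, 4], fake_detector, fake_hrr_model))
print(results)
```

Writing the loop as a generator keeps memory use constant, which suits a live video stream of unknown length.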

By analyzing the position of the fire source in the images, we can determine the distance between the camera and the fire source in real time to scale the flame image to the scale we need. Then, using a pre-trained deep learning model [3], we can output the instantaneous fire HRR in real time while on the move. This model can automate the measurement of fire heat release in a short period of time. The stereo vision system has an error of less than 20% in measuring the distance between the camera and the fire source, flame height, and power (Fig. 4).
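The geometry behind this rescaling can be illustrated with the standard pinhole stereo relations, assuming a calibrated and rectified camera pair. The camera parameters, the reference distance, and the function names below are hypothetical. The sketch shows the textbook formulas rather than the specific implementation in [5].

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to the fire (m) from stereo disparity: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def flame_height_m(height_px: float, depth_m: float, focal_px: float) -> float:
    """Physical flame height (m) from its pixel height: H = h * Z / f."""
    return height_px * depth_m / focal_px


def rescale_factor(depth_m: float, reference_depth_m: float) -> float:
    """Zoom factor that makes the flame appear as if seen from the reference distance.

    Apparent size is inversely proportional to distance, so a fire twice as
    far away as the reference must be enlarged by a factor of two.
    """
    return depth_m / reference_depth_m


# Hypothetical camera: 1000 px focal length, 0.12 m baseline.
distance = depth_from_disparity(focal_px=1000.0, baseline_m=0.12, disparity_px=20.0)
height = flame_height_m(height_px=150.0, depth_m=distance, focal_px=1000.0)
zoom = rescale_factor(distance, reference_depth_m=3.0)
print(distance, height, zoom)  # about 6 m away, about 0.9 m tall, zoom of about 2
```

The rescaled image can then be passed to an HRR model trained at the reference distance.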

Figure 4: Evolution of pool fire, (a) Raw video, (b) Fire detection, (c) Flame height, and (d) fire calorimetry.

This AI-based fire calorimetry method provides an efficient and feasible way to measure the distance, flame height, and size of the fire in real time. It has great potential in firefighting operations and decision-making. By obtaining key information about the fire source in real time, firefighters can respond more accurately, effectively reducing the harm caused by the fire.

App Development

To make it easier for everyone to use and test the models we trained, we developed the Fire Vigilance Pocket Edition application (FV Pocket, http://firevigilance.firelabxy.com/), a web-based tool designed for real-time fire monitoring and intelligent analysis of fire data. The system includes features such as fire detection [5], fire segmentation [6], and fire calorimetry [3], all aimed at enhancing fire vigilance and safety (Fig. 5). Using deep learning, the application can identify and measure fires from images, providing important information to support quick decisions.

Figure 5: Demonstration of FV Pocket using the paper box fire.

The webpage is still under development, but we believe it will be ready for use soon. Please be patient and stay tuned!

Summary

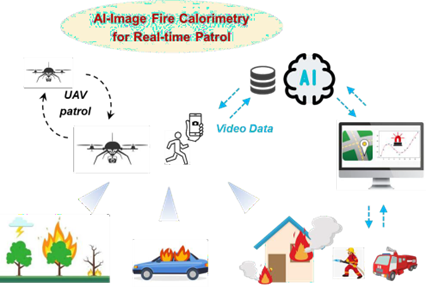

In the field of smart firefighting, computer vision fire calorimetry technology is gradually showing its huge potential. Instead of relying on complex detection equipment installed in advance, this technology uses regular cameras to estimate the size of a fire (see Fig. 6). It can be used with any video device in the fire scenario, from drones to handheld cameras, for real-time fire monitoring and measurement.

Figure 6: Automatic fire distance and power measurements for smart firefighting.

Here's how it works: the camera captures images of the fire scenario, which are then processed in real time using artificial intelligence and computer vision technology. This allows the system to pinpoint the location, size, and intensity of the fire. Firefighting teams can then receive critical information instantly, helping them optimize their firefighting and rescue efforts, make more accurate decisions, and reduce casualties.

In practical terms, this means that firefighters and firefighting robots can quickly assess how a fire is developing and respond rapidly, all in a way that's easy and convenient.

References

[1] Fire loss in the United States | NFPA Research, (2023). https://www.nfpa.org/education-and-research/research/nfpa-research/fire-statistical-reports/fire-loss-in-the-united-states.

[2] N. Johansson, S. Svensson, Review of the Use of Fire Dynamics Theory in Fire Service Activities, Fire Technol. 55 (2019) 81–103. https://doi.org/10.1007/s10694-018-0774-3.

[3] Z. Wang, T. Zhang, X. Huang, Predicting real-time fire heat release rate by flame images and deep learning, Proc. Combust. Inst. 39 (2022) 4115–4123. https://doi.org/10.1016/j.proci.2022.07.062.

[4] M. Bundy, User’s Guide for Fire Calorimetry Database (FCD), (2020).

[5] Z. Wang, Y. Ding, T. Zhang, X. Huang, Automatic real-time fire distance, size and power measurement driven by stereo camera and deep learning, Fire Saf. J. 140 (2023) 103891. https://doi.org/10.1016/j.firesaf.2023.103891.

[6] Z. Wang, T. Zhang, X. Huang, Explainable deep learning for image-driven fire calorimetry, Appl. Intell. (2023). https://doi.org/10.1007/s10489-023-05231-x.